Transition-Informed Reinforcement Learning for Large-Scale Stackelberg Mean-Field Games

Synopsis

This technology is designed for scenarios where a single leader incentivises a large population of followers in order to maximise the leader's own utility. Potential applications include fleet management (for example, repositioning e-hailing drivers), online ad auctions and elections.

Opportunity

Solving large-scale multi-agent problems remains a formidable challenge in urban planning. This technology can efficiently solve large-scale Stackelberg mean-field games (SMFGs), which model various real-world applications such as fleet management and online ad auctions. Traditional multi-agent reinforcement learning (RL) methods are typically limited to a small number of agents. In contrast, this technology can scale to hundreds or even thousands of agents.

Technology

Many real-world scenarios, such as fleet management and online ad auctions, can be modelled as SMFGs, where a leader incentivises numerous homogeneous, self-interested followers in order to maximise the leader's own utility. Traditional model-free RL methods are often data-inefficient, because the collected data is discarded after each update of the agent policies.

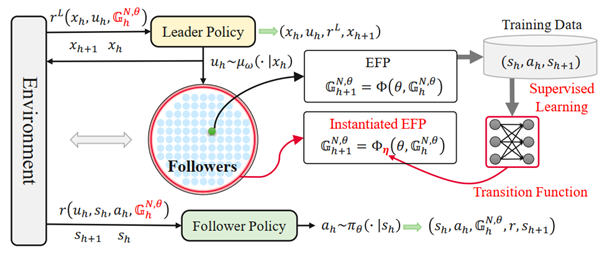

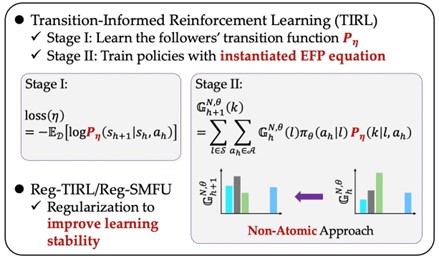

To reuse past experience efficiently, Transition-Informed Reinforcement Learning (TIRL) introduces a learned model that captures how the follower population evolves and uses it to support updates of the agent policies. The model is a neural network that takes an agent's current state and action as input and outputs a probability distribution over the state space. With this learned model, the followers' new state distribution can be obtained quickly in a non-atomic way, that is, at the level of the population rather than agent by agent, which significantly improves scalability. TIRL also employs regularisation techniques to stabilise the learning process and improve performance.

Figure 1: Overview of Transition-Informed Reinforcement Learning (TIRL) framework.

Figure 2: Technical details of TIRL.
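
As a rough illustration of the non-atomic update described above, the minimal Python sketch below pushes a population-level state distribution through a transition model in one pass: mu'(s') = sum over s and a of mu(s) * pi(a|s) * P(s'|s, a). Everything named here is an assumption made for illustration and not the authors' implementation: the `TransitionModel` class, its single linear layer with softmax output, the discrete state and action spaces, and the helper `next_state_distribution`. The weights are random rather than learned, so the numbers carry no meaning beyond showing the mechanics.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TransitionModel:
    """Hypothetical stand-in for the learned model: one linear layer + softmax.

    Input: one-hot (state, action). Output: distribution over next states.
    Weights are random here; in practice they would be fitted to past data.
    """

    def __init__(self, n_states, n_actions, seed=0):
        rng = random.Random(seed)
        self.n_states = n_states
        self.n_actions = n_actions
        n_inputs = n_states + n_actions
        self.weights = [
            [rng.gauss(0.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_states)
        ]

    def predict(self, state, action):
        # One-hot encode the (state, action) pair.
        x = [0.0] * (self.n_states + self.n_actions)
        x[state] = 1.0
        x[self.n_states + action] = 1.0
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]
        return softmax(logits)


def next_state_distribution(mu, policy, model):
    """Population-level update: mu'(s') = sum_s sum_a mu(s) pi(a|s) P(s'|s,a)."""
    mu_next = [0.0] * len(mu)
    for s, mass in enumerate(mu):
        for a, p_action in enumerate(policy[s]):
            for s_next, p in enumerate(model.predict(s, a)):
                mu_next[s_next] += mass * p_action * p
    return mu_next


# Toy setup (assumed values): 4 states, 2 actions, uniform population and policy.
model = TransitionModel(n_states=4, n_actions=2)
mu = [0.25, 0.25, 0.25, 0.25]
policy = [[0.5, 0.5] for _ in range(4)]
mu_next = next_state_distribution(mu, policy, model)
print(mu_next)
```

The cost of this update depends on the number of states and actions, not on the number of followers, which is one way to read the scalability claim: the same loop applies whether the distribution `mu` summarises a hundred agents or many thousands.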

Applications & Advantages

Main applications include fleet management, online ad auctions, airline price analysis, elections and stock trading.

Advantages:

- Efficiently solves large-scale multi-agent problems in urban planning and beyond

- Scales to hundreds or thousands of agents

- Leverages a neural network model to predict followers' state distributions

- Uses regularisation techniques for stable learning

- Provides a scalable, data-efficient solution for optimising complex systems involving numerous interacting agents
