Collection and Transfer of Synthetic Point Cloud for the Understanding of LiDAR Point Cloud

Synopsis

This invention introduces a pipeline for collecting and translating synthetic point clouds: virtual 3D environments are constructed, 3D objects of interest are embedded in them, and points are collected with LiDAR simulators. The translated point clouds can be used to train 3D perception models in the same way as real point clouds.

Opportunity

LiDAR point clouds offer unique advantages: they capture depth directly and support 24-hour operation because they are unaffected by lighting conditions. They have diverse applications in perception and navigation tasks such as remote sensing, autonomous vehicles and robot navigation. However, collecting and annotating large-scale LiDAR point cloud data is laborious, time-consuming and error-prone. The challenge is greatest in dense prediction tasks such as object detection and semantic segmentation, which require annotations at the point or region level.

Automated generation of synthetic point clouds provides a solution to this data challenge. Given 3D assets such as 3D object models and virtual environments, LiDAR simulators can generate large-scale point cloud data for various semantic classes. Although synthetic point clouds differ from real point clouds in features and distributions, they remain valuable for pre-training and fine-tuning 3D perception models when only minimal real data is available. In addition, translation networks can be designed to adjust the style and appearance of synthetic point clouds, making them more suitable for training deep networks to handle real point clouds.

Technology

The SynLiDAR dataset, a large-scale multi-class synthetic LiDAR point cloud dataset, contains over 19 billion annotated points of 32 semantic classes. This dataset was created using multiple virtual environments and 3D object models of various semantic classes, obtained either from professional artists or online sources. The synthetic point clouds were collected using an off-the-shelf LiDAR simulator that mimics real LiDAR sensors.

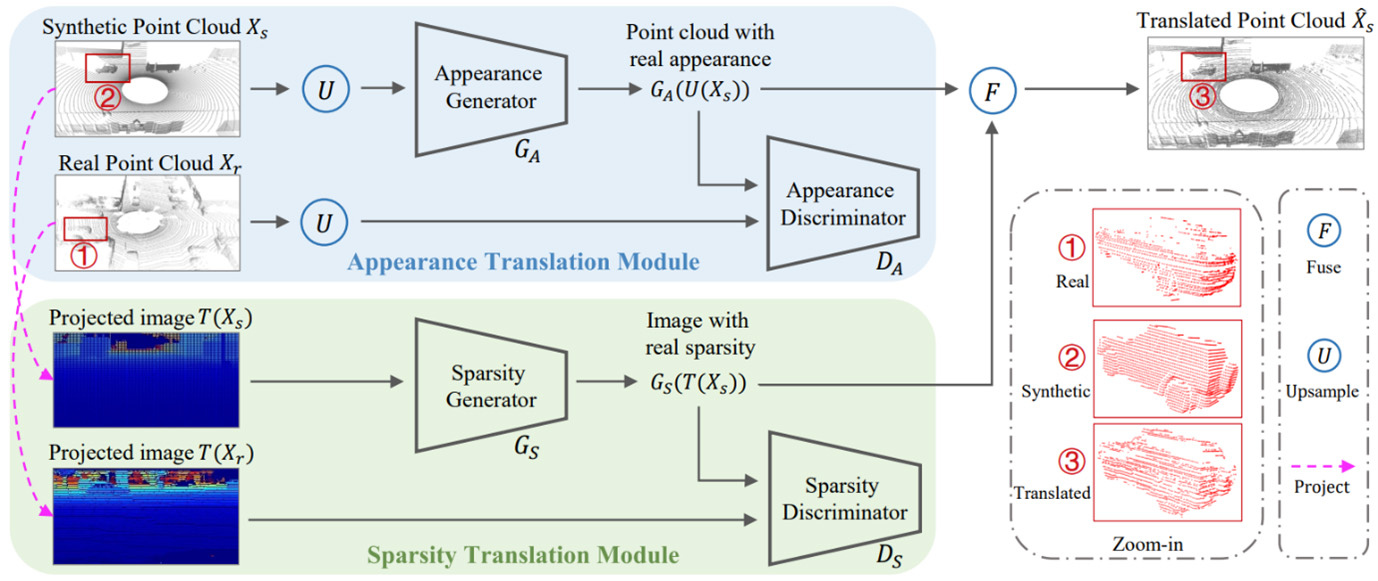
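The collection step can be illustrated with a toy simulator. The sketch below is not the off-the-shelf simulator used to build SynLiDAR; it is a minimal ray-casting example under stated assumptions: a virtual scene made of a ground plane and a single sphere, a 16-beam sensor with a vertical field of view of -15° to +15°, and hypothetical function names (`ray_sphere`, `ray_ground`, `simulate_scan`). It shows why synthetic points come with labels at no extra cost: the simulator knows which object each ray hit.

```python
import math

def ray_sphere(origin, direction, center, radius):
    """Nearest positive hit distance of a unit-length ray with a sphere, or None."""
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    b = ox * direction[0] + oy * direction[1] + oz * direction[2]
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def ray_ground(origin, direction):
    """Hit distance with the plane z = 0, or None if the ray does not point down."""
    if direction[2] >= 0:
        return None
    return -origin[2] / direction[2]

def simulate_scan(sensor_height=1.8, n_beams=16, n_azimuth=360,
                  max_range=50.0, sphere=((10.0, 0.0, 1.0), 1.0)):
    """Cast one full rotation of rays and return (x, y, z, label) tuples.

    All parameter values are illustrative assumptions, not SynLiDAR settings.
    """
    origin = (0.0, 0.0, sensor_height)
    points = []
    for beam in range(n_beams):
        # Spread beams evenly over an assumed -15..+15 degree vertical field of view.
        elev = math.radians(-15.0 + 30.0 * beam / (n_beams - 1))
        for step in range(n_azimuth):
            azim = 2.0 * math.pi * step / n_azimuth
            d = (math.cos(elev) * math.cos(azim),
                 math.cos(elev) * math.sin(azim),
                 math.sin(elev))
            hits = []
            t = ray_ground(origin, d)
            if t is not None:
                hits.append((t, "ground"))
            t = ray_sphere(origin, d, sphere[0], sphere[1])
            if t is not None:
                hits.append((t, "object"))
            if not hits:
                continue
            t, label = min(hits)  # the closest surface occludes the others
            if t > max_range:
                continue
            points.append((origin[0] + t * d[0],
                           origin[1] + t * d[1],
                           origin[2] + t * d[2],
                           label))
    return points

scan = simulate_scan()
n_object = sum(1 for p in scan if p[3] == "object")
print(len(scan), "points,", n_object, "labelled as object")
```

A production simulator would replace the analytic sphere and plane with mesh intersection inside a rendered environment and would model sensor noise and beam dropout, none of which this sketch attempts.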

To address the differences in appearance and sparsity between synthetic and real point clouds, a point cloud translator (PCT) network was designed. This network translates synthetic point clouds to make them more useful for training deep networks on real point clouds. The PCT decomposes the domain gap into appearance and sparsity components, handling them in separate network branches. Experiments show that the translated point clouds closely resemble real point clouds in appearance and feature distribution, effectively augmenting real point clouds for training various point cloud networks.

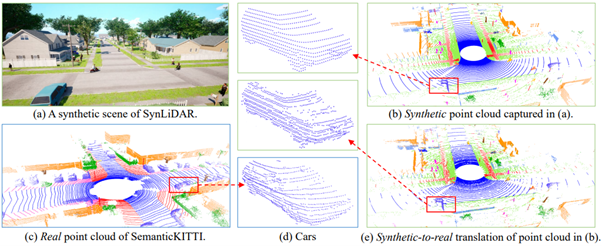

Figure 1: We create SynLiDAR, a large-scale, multi-class synthetic LiDAR point cloud dataset, as illustrated in (b). SynLiDAR contains over 19 billion annotated points of 32 semantic classes, collected by constructing multiple virtual environments and 3D object models as shown in (a). To make synthetic point clouds more useful for handling real-world LiDAR point clouds such as the one shown in (c), we design a point cloud translator (PCT) that translates synthetic point clouds by decomposing the domain gap into an appearance component and a sparsity component. The translated data in (e) is closer in distribution to real point clouds and is more effective for processing them. The close-up views in (d) show the translation effects.

Figure 2: PCT disentangles point cloud translation into an appearance translation task and a sparsity translation task. Given a synthetic point cloud, the appearance translation first learns to reconstruct a dense point cloud whose appearance is similar to that of real point clouds. The sparsity translation then learns the real sparsity distribution in 2D space and fuses it with the reconstructed point cloud in 3D space. The final translation has an appearance and sparsity similar to those of real point clouds, as illustrated.
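The idea of handling sparsity in 2D and fusing it back into 3D can be sketched as follows. This is not the PCT network: in PCT the sparsity distribution is learned from real data, whereas here a hand-written boolean mask stands in for it. The spherical projection, the assumed vertical field of view of -25° to +3°, and the function names (`project_to_cell`, `apply_sparsity_mask`) are all illustrative assumptions. Each 3D point is mapped to a cell of a 2D range-image grid, and the point is kept only if the mask marks that cell as occupied.

```python
import math

def project_to_cell(point, height, width, fov_down_deg=-25.0, fov_up_deg=3.0):
    """Map a 3D point to a (row, col) cell of a spherical range image.

    Returns None if the point is at the origin or outside the assumed
    vertical field of view.
    """
    x, y, z = point
    rng = math.sqrt(x * x + y * y + z * z)
    if rng == 0.0:
        return None
    azim = math.atan2(y, x)      # horizontal angle in [-pi, pi]
    elev = math.asin(z / rng)    # vertical angle
    fov_down = math.radians(fov_down_deg)
    fov_up = math.radians(fov_up_deg)
    if not (fov_down <= elev <= fov_up):
        return None
    col = int((azim + math.pi) / (2.0 * math.pi) * width) % width
    row = int((fov_up - elev) / (fov_up - fov_down) * height)
    row = min(row, height - 1)   # elev == fov_down would otherwise overflow
    return row, col

def apply_sparsity_mask(points, mask):
    """Keep only the points whose 2D cell is marked True in the mask.

    `mask` is a list of rows of booleans. It is a stand-in for a learned
    real-data sparsity pattern, not the output of PCT.
    """
    height, width = len(mask), len(mask[0])
    kept = []
    for p in points:
        cell = project_to_cell(p, height, width)
        if cell is not None and mask[cell[0]][cell[1]]:
            kept.append(p)
    return kept

# Usage: a 4 x 8 mask that drops every odd column of the range image.
mask = [[col % 2 == 0 for col in range(8)] for _ in range(4)]
dense = [(0.995, 0.0998, 0.0), (0.540, 0.841, 0.0), (0.540, -0.841, 0.0)]
sparse = apply_sparsity_mask(dense, mask)
print(len(dense), "->", len(sparse), "points after masking")
```

Working in the 2D grid keeps the sparsity pattern tied to the sensor's scan geometry (beams and azimuth steps), which is one plausible reason to model sparsity there rather than directly in 3D.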

Applications & Advantages

Main application areas include remote sensing, autonomous-vehicle navigation and robot guidance.

Advantages:

- Utilises synthetic LiDAR point clouds to reduce the need for labour-intensive, error-prone manual data annotation.

- Enhances the efficiency of training deep-learning models for perception and navigation tasks.

- Improves the utility of synthetic data by aligning its appearance and sparsity with real point clouds.

- Enables effective network training and fine-tuning with minimal real data requirements.
