Faster LSM-Tree Structure Implemented on RocksDB

Synopsis

Log-structured merge-trees (LSM-trees) are essential for managing vast amounts of data in computing environments, making them vital for big data applications. Opportunities exist to enhance the efficiency of LSM-trees, which is crucial in sectors like e-commerce and finance. This invention introduces Moose, built on RocksDB, achieving approximately 2x faster performance on average.

Opportunity

The LSM-tree is a specialised data structure crucial for managing data in large-scale computing environments. Designed to handle vast amounts of data efficiently, LSM-trees are integral to the performance of databases, especially those that are write-intensive. Optimising LSM-tree performance is increasingly important as data volume and velocity grow, presenting opportunities to enhance efficiency and speed.

Improving LSM-tree performance can lead to faster data retrieval and more efficient storage, enhancing the overall speed and responsiveness of big-data applications. This is particularly beneficial in sectors such as e-commerce, where quick data processing translates to better customer experiences, as well as in finance, where real-time data analysis is essential for informed decision-making. This invention presents Moose, a new LSM-tree structure built on RocksDB, which achieves approximately 2x faster performance compared to the original RocksDB.

Technology

This invention comprises two main components designed to enhance the efficiency of LSM-tree structures within RocksDB, a leading storage engine. The first component is a new mathematical model that broadens design options for the LSM-tree, utilising optimisation techniques to minimise system operating costs effectively. The second component involves implementing and optimising this new LSM-tree design practically. The compaction policy has been redesigned to segregate data into 'hot' (frequently accessed) and 'cold' (rarely accessed) segments, ensuring consistent read and write efficiency. Additionally, a novel bloom filter has been introduced to enhance point lookup performance, making data retrieval faster and more efficient. These innovations have been rigorously tested against various benchmarks, consistently outperforming RocksDB and demonstrating significant efficiency improvements.

Figure 1: An illustration for the largest three levels of the default Moose, in which, SR and RM stands for size ratio and run magnification, respectively. The size ratio is configured as {9, 6, 5} corresponding to a run number of {3, 2, 2}.

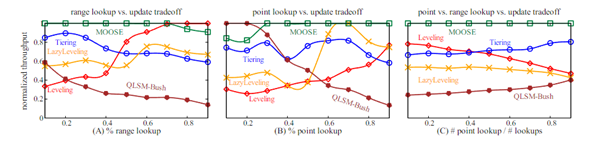

Figure 2: Evaluating the trade-off between different operations. Moose outperforms all the other baselines in most workloads.

Applications & Advantages

Application areas include NoSQL databases that handle high write throughput scenarios, such as Cassandra and WireTiger, as well as search engines (Apache Lucene), streaming computation systems (Apache Flink and Kafka) and cloud-storage systems (BigTable and AWS S3).

Advantages:

- Enhanced Efficiency: Achieves approximately 2x faster performance compared to the original RocksDB.

- Optimised Compaction Policy: Segregates data into 'hot' and 'cold' segments for consistent efficiency.

- Improved Point Lookup Performance: Novel bloom filter for faster data retrieval.

- Broad Applicability: Suitable for various big data and high-throughput scenarios.