Fault-Tolerant Technique for Deep Learning Accelerator Design

Synopsis

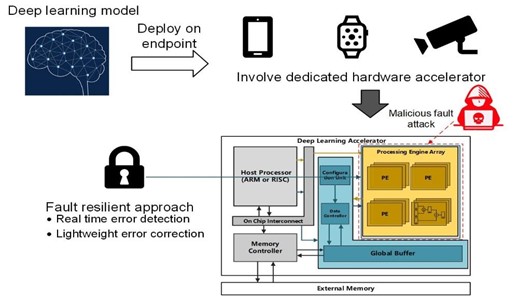

This technique enables a lightweight, error-resilient and hardware-efficient implementation of pre-trained deep convolutional neural network models. Robust, fault-tolerant deep neural network (DNN) accelerators are crucial for edge artificial intelligence (AI) applications.

Opportunity

Fault-injection attacks on DNN hardware accelerators used for real-world object recognition and classification highlight the need for fault resilience in safety- and security-critical applications. Error correction codes, widely used to protect memory and data transfers, are not applicable to arithmetic operations, and duplicating functional units for majority voting is too costly. Current shadow register-based solutions must stall execution to flush the multiply-accumulate (MAC) pipeline, incurring significant throughput and power overheads.
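To make the cost of the majority-voting alternative concrete, the sketch below models triple modular redundancy (TMR) for a single MAC operation in Python. It illustrates the generic baseline described above, not the technology offered here; the function names `mac`, `majority` and `tmr_mac` and the single-bit-flip fault model are hypothetical assumptions. Every result is computed three times, so a hardware TMR implementation needs roughly three times the multipliers and adders plus a voter.

```python
from collections import Counter
from typing import Callable, List, Optional, Sequence

# Hypothetical fault model: takes (replica index, correct result) and returns
# the value that replica actually produces.
FaultModel = Callable[[int, int], int]


def mac(weights: Sequence[int], activations: Sequence[int], acc: int = 0) -> int:
    """Multiply-accumulate: acc + sum(w * a) over paired elements."""
    for w, a in zip(weights, activations):
        acc += w * a
    return acc


def majority(values: List[int]) -> Optional[int]:
    """Return the value produced by at least two of three replicas, else None."""
    value, count = Counter(values).most_common(1)[0]
    return value if count >= 2 else None


def tmr_mac(
    weights: Sequence[int],
    activations: Sequence[int],
    fault: Optional[FaultModel] = None,
) -> Optional[int]:
    """Run three redundant MAC replicas and vote on their results."""
    results = []
    for replica in range(3):
        result = mac(weights, activations)
        if fault is not None:
            result = fault(replica, result)  # corrupt this replica's output
        results.append(result)
    return majority(results)


if __name__ == "__main__":
    def flip_bit4_in_replica1(replica: int, result: int) -> int:
        # Hypothetical single-event upset: flip bit 4 of replica 1 only.
        return result ^ (1 << 4) if replica == 1 else result

    # The two fault-free replicas outvote the corrupted one.
    print(tmr_mac([1, 2, 3], [4, 5, 6], fault=flip_bit4_in_replica1))  # prints 32
```

The vote masks any single faulty replica, but it cannot resolve a case where all three replicas disagree, and it triples the arithmetic hardware for every MAC unit protected.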

This technology offers a hardware design method for DNN convolution operations that corrects errors efficiently and quickly enough to prevent degradation of prediction accuracy, whether the errors arise naturally or from maliciously injected faults. The method can be applied to the convolutional layers of any pre-trained DNN model implemented on application-specific integrated circuit (ASIC) or field-programmable gate array (FPGA) platforms, increasing robustness against fault-injection attacks without reducing throughput.

Technology

The convergence of AI and the Internet of Things (IoT) introduces new security challenges for deploying endpoint devices. Data analytics at the edge is vulnerable to fault-injection attacks because adversaries can gain both physical and remote access to DNN operations.

This solution can be implemented on low-cost edge computing platforms, allowing DNN computing errors to be corrected in real time before they cause misclassification or incorrect predictions. It maintains the throughput and accuracy of pre-trained DNN models under deliberate attacks such as overheating, voltage sagging, clock glitching and overclocking. Crucially, it detects computing errors and restores the correct results without suspending the pipeline or replaying data.
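Why avoiding pipeline suspension matters can be estimated with a simple cycle-count model. The sketch below is a hypothetical first-order model, not a description of the offered design: it assumes a MAC pipeline of depth `depth` that issues one MAC per cycle once full, and that a stall-and-replay scheme (such as the shadow-register approach mentioned earlier) loses `penalty_per_error` cycles for each detected error, while an in-place correction scheme loses none. The names `pipeline_cycles` and `throughput` and all parameter values are illustrative assumptions.

```python
def pipeline_cycles(
    num_macs: int, depth: int, detected_errors: int, penalty_per_error: int
) -> int:
    """Cycles to stream num_macs operations through a pipeline of the given depth.

    Hypothetical first-order model: one MAC issues per cycle once the pipeline
    is full, and each detected error adds a fixed flush-and-refill penalty.
    """
    if num_macs < 0 or depth < 1 or detected_errors < 0 or penalty_per_error < 0:
        raise ValueError("invalid model parameters")
    fill_latency = depth - 1  # cycles before the first result emerges
    return num_macs + fill_latency + detected_errors * penalty_per_error


def throughput(num_macs: int, cycles: int) -> float:
    """MAC results delivered per clock cycle."""
    return num_macs / cycles


if __name__ == "__main__":
    n, depth, errors = 1000, 8, 10  # illustrative values only
    # Assume a stall-and-replay scheme pays roughly one pipeline depth per error.
    stall = pipeline_cycles(n, depth, errors, penalty_per_error=depth)
    # An in-place correction scheme is modelled as paying no penalty.
    in_place = pipeline_cycles(n, depth, errors, penalty_per_error=0)
    print(f"stall-and-replay: {stall} cycles, {throughput(n, stall):.3f} MAC/cycle")
    print(f"in-place correction: {in_place} cycles, {throughput(n, in_place):.3f} MAC/cycle")
```

With these assumed values the stall-and-replay case needs 1087 cycles against 1007 for in-place correction, and the gap grows linearly with the number of detected errors, which is why fault injection at high rates hurts stall-based schemes most.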

This method is effective on any hardware architecture dominated by pipelined MAC operations, such as the convolutional and batch normalisation layers in tensor processing units and machine learning accelerators. In FPGA prototype evaluations, this MAC design technique achieved 12.2% to 47.6% higher error resilience than existing methods under the same fault injection rates.

The technology owner is keen to license this hardware design to ASIC or FPGA design houses developing DNN accelerator integrated circuits.

Figure 1: Structure of a deep learning model.

Applications & Advantages

Main application areas include defect detection in manufacturing, traffic management in transportation, queue detection and automated checkout in retail, farmland monitoring in smart agriculture, and entertainment applications in virtual reality and augmented reality.

Advantages:

- Lightweight and hardware-efficient implementation.

- Significantly higher error resilience than existing methods, without throughput loss.

- Corrects errors in real-time without pipeline suspension or data replay.

- Applicable to various DNN models and hardware platforms.
