ROWBACK: Robust Watermarking of Neural Networks with BACKdoors

Synopsis

ROWBACK offers a robust watermarking technique for neural networks, securing intellectual property (IP) in machine learning. It is vital for machine learning as a service (MLaaS) providers and sectors like healthcare, ensuring ownership proof and protecting investments against unauthorised use.

Opportunity

ROWBACK presents a revolutionary approach to securing IP in the rapidly evolving machine learning landscape, addressing the critical challenge of proving ownership of trained neural networks. This is essential for stakeholders who incur significant expenses in data curation and computing infrastructure for model development. By leveraging two properties of neural networks, the existence of adversarial examples and the ability to embed backdoors, ROWBACK introduces a robust watermarking technique that provides verifiable and durable proof of ownership. This technology is particularly advantageous for MLaaS providers, companies deploying models publicly, and sectors like healthcare where model accuracy depends on costly datasets. ROWBACK promises to protect investments and prevent unauthorised model usage, offering a solid foundation for IP rights enforcement and model licensing.

Technology

Claiming ownership of trained neural networks is critical for stakeholders investing heavily in high-performance neural networks. The entire machine learning pipeline, from data curation to high-performance computing infrastructure for neural architecture search and training, incurs substantial costs. Watermarking neural networks is a potential solution, but standard techniques have been shown to be vulnerable to attacks. ROWBACK provides a robust watermarking mechanism for neural architectures by leveraging two properties of neural networks: the presence of adversarial examples and the ability to embed backdoors in the network during training.

ROWBACK ensures strong proofs of ownership through the following:

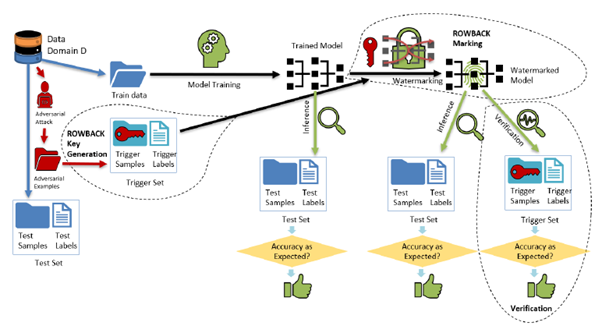

- Re-designed trigger set: Uses adversarial examples of the model as trigger inputs and assigns trigger labels to them. Customising the trigger set in this way preserves the model's functionality while embedding strong watermarks that are extremely difficult to replicate.

- Uniform distribution of watermarks: Embeds watermarks uniformly throughout the model by marking every layer with backdoor imprints. This defends against model modification attacks by making network theft computationally equivalent to training a new network from scratch.
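The re-designed trigger set can be illustrated with a minimal sketch. It is not ROWBACK's implementation: the toy linear classifier, the use of a single FGSM step to generate adversarial examples, the rule that the trigger label differs from both the true and the adversarial class, and all names (`fgsm`, `build_trigger_set`, `eps`) are assumptions made for illustration.

```python
import math
import random


def logits(W, b, x):
    """Scores of a toy linear classifier: one row of W per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]


def predict(W, b, x):
    z = logits(W, b, x)
    return max(range(len(z)), key=lambda k: z[k])


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(W, b, x, y, eps):
    """One FGSM step: shift x by eps along the sign of the loss gradient."""
    p = softmax(logits(W, b, x))
    # Cross-entropy gradient w.r.t. input j: sum_k (p_k - 1[k == y]) * W[k][j]
    grad = [
        sum((p[k] - (1.0 if k == y else 0.0)) * W[k][j] for k in range(len(W)))
        for j in range(len(x))
    ]
    return [xj + eps * sign(g) for xj, g in zip(x, grad)]


def build_trigger_set(W, b, samples, eps, num_classes, rng):
    """Keep adversarial inputs that fool the model and attach a trigger label."""
    triggers = []
    for x, y in samples:
        x_adv = fgsm(W, b, x, y, eps)
        y_adv = predict(W, b, x_adv)
        if y_adv == y:
            continue  # not adversarial for this model, so not used as a trigger
        # Assumption: the trigger label differs from both the true and adversarial class.
        candidates = [c for c in range(num_classes) if c not in (y, y_adv)]
        triggers.append((x_adv, rng.choice(candidates)))
    return triggers


# Toy 3-class model on 2 features (hypothetical weights, for illustration only).
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.0]
samples = [([0.3, 0.1], 0), ([0.1, 0.5], 1), ([2.0, 0.0], 0)]

triggers = build_trigger_set(W, b, samples, eps=0.3, num_classes=3, rng=random.Random(0))
for x_adv, label in triggers:
    print(x_adv, "->", label)
```

In this toy run, two of the three samples are pushed across a decision boundary and become triggers; the third stays correctly classified and is skipped. Because the triggers are derived from the model's own adversarial examples, a different model would generally yield a different trigger set.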

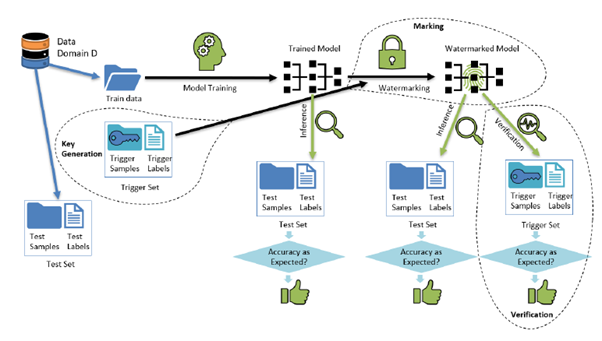

Figure 1: Schematic diagram of watermarking using backdooring.

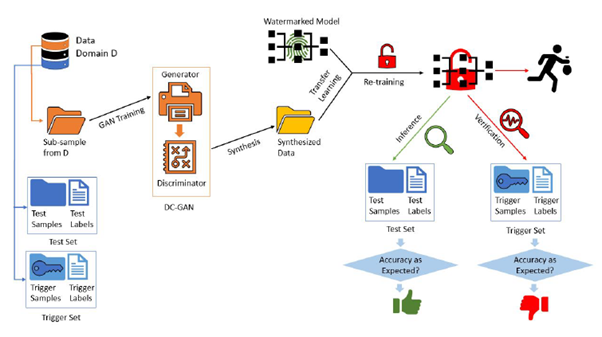

Figure 2: Model modification attack using synthesis.

Figure 3: Schematic diagram of ROWBACK.

Applications & Advantages

Main application areas include MLaaS providers, companies deploying ML models in public domains, and investors in high-accuracy models that need highly curated datasets, especially in the healthcare sector.

Advantages:

- Ensures the protection of IP rights over a trained neural network, which is necessary for licensing and for preventing unauthorised usage

- Provides verifiable proof of ownership: a watermarked deep neural network (DNN) can be checked for its owner's watermark whenever required

- Robust against attacks that try to steal or extract models without authorisation
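
Ownership verification with a backdoor watermark can be sketched as follows: the owner queries a suspect model with the secret trigger set and checks how often it returns the trigger labels. This is an illustrative sketch under stated assumptions, not ROWBACK's verification procedure: the 0.9 threshold, the function names, and the stand-in models are hypothetical, and the chance estimate assumes an unrelated model matches each trigger label independently with probability 1/(number of classes).

```python
from math import comb


def trigger_accuracy(model, trigger_set):
    """Fraction of trigger inputs the model maps to their trigger label."""
    hits = sum(1 for x, label in trigger_set if model(x) == label)
    return hits / len(trigger_set)


def chance_match_probability(n, hits, num_classes):
    """Binomial tail: P(at least `hits` of n matches) at 1/num_classes per match."""
    p = 1.0 / num_classes
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(hits, n + 1))


def verify_ownership(model, trigger_set, threshold=0.9):
    """Accept the claim if trigger accuracy reaches the (hypothetical) threshold."""
    return trigger_accuracy(model, trigger_set) >= threshold


# Stand-in trigger set: 10 inputs with fixed trigger labels over 10 classes.
trigger_set = [(i, (i * 7) % 10) for i in range(10)]
trigger_labels = dict(trigger_set)


def watermarked(x):
    return trigger_labels[x]  # stand-in for a model that learned the backdoor


def unrelated(x):
    return 0  # stand-in for a model that never saw the trigger set


owner_claim = verify_ownership(watermarked, trigger_set)
other_claim = verify_ownership(unrelated, trigger_set)
p_chance = chance_match_probability(n=10, hits=10, num_classes=10)
print(owner_claim, other_claim, p_chance)
```

Under these assumptions, the probability that an unrelated model matches all ten trigger labels by chance is about 1e-10, which is why a high trigger accuracy is treated as evidence of ownership.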