Inference and Prediction of Participant Behaviour with Entry-Flipped Transformer

Synopsis

This invention predicts people’s future behaviour from observations of other participants. It can be applied to video event generation and to group activity training and scoring systems.

Opportunity

The model treats a person’s behaviour as a combination of self-intention and social interaction, with the latter being crucial in group activities. Existing group-related computer vision work does not adequately address scenarios with heavy social interaction among participants. Here, participant behaviour inference and prediction are modelled as a frame-wise sequence estimation problem. Existing methods often suffer from error accumulation because their recurrent or auto-regressive structure feeds the estimate from the previous step back in as input to the next step. By altering the order of the three entries (query, key and value) in an attention function, the entry-flipping mechanism in this model limits accumulated errors and recovers more efficiently from spike errors.
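The reordering of query, key and value can be illustrated with a minimal NumPy sketch. It is not the inventors' implementation: the feature sizes, the random inputs and the single-head, projection-free attention are all assumptions made for illustration. The only detail taken from the description is that ground-truth features of observed participants go to the query entry; the key and value are assumed here to come from the target participants' estimated features.

```python
import numpy as np

def attention(query, key, value):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = query.shape[-1]
    scores = query @ key.T / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ value

rng = np.random.default_rng(0)
d_model = 8  # hypothetical feature size

# Hypothetical inputs: 3 observed participants (ground truth is available)
# and 2 target participants (features come from earlier, possibly wrong, estimates).
observed = rng.normal(size=(3, d_model))
target = rng.normal(size=(2, d_model))

# Conventional decoder ordering: the estimated target features drive the query,
# so an error in an earlier estimate re-enters the attention at every step.
conventional = attention(query=target, key=observed, value=observed)

# Entry-flipped ordering (assumed layout): ground-truth observed features
# take the query entry; target features supply key and value.
flipped = attention(query=observed, key=target, value=target)

print(conventional.shape, flipped.shape)
```

In the flipped call the output has one row per observed participant, and each row is a weighted average of the target features. How the full model maps this output back to per-target estimates is not stated in this description, so the sketch stops at the attention call.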

Technology

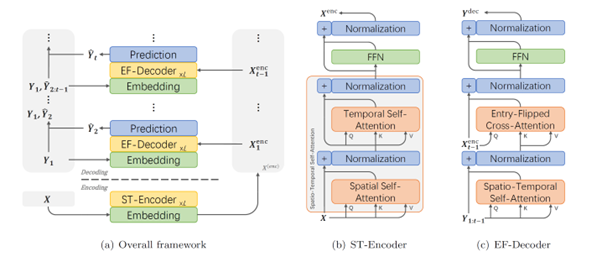

The EF-Transformer network comprises a feature embedding layer, Spatio-Temporal Encoder (ST-Encoder) layers, Entry-Flipped Decoder (EF-Decoder) layers and a prediction layer.

In the feature embedding layer, two fully connected layers are applied to the coordinates and action labels of participants to map them to higher-dimensional features. These features are concatenated with a positional encoding feature. The ST-Encoder contains two self-attention functions, one for the spatial domain and one for the temporal domain, as well as a feed-forward network (FFN) on top of the temporal self-attention function. The EF-Decoder consists of a spatio-temporal self-attention function, a cross-attention function with an entry-flipping mechanism, and an FFN. The spatio-temporal self-attention models the relations among different frames of all target participants. The cross-attention function captures the relations between target participants and observed participants within the same frame. Unlike the cross-attention in a typical transformer, the entry-flipped cross-attention focuses more on social interactions than on self-intentions. By sending ground-truth features of observed participants to the query entry of the cross-attention, the model limits error accumulation. Finally, a prediction layer is built on the output of the decoders, mapping decoded features to coordinate and action-label estimates for target participants.
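The feature embedding step described above can be sketched in NumPy as follows. This is a rough illustration under stated assumptions, not the actual network: it assumes one fully connected layer for the 2-D coordinates and one for one-hot action labels, a sinusoidal positional encoding over the frame index, and arbitrary sizes. The function names, parameter names and dimensions are hypothetical.

```python
import numpy as np

def positional_encoding(num_frames, dim):
    """Sinusoidal encoding of the frame index (an assumed choice; dim must be even)."""
    positions = np.arange(num_frames)[:, None]
    freqs = np.exp(-np.log(10000.0) * np.arange(0, dim, 2) / dim)
    angles = positions * freqs
    enc = np.zeros((num_frames, dim))
    enc[:, 0::2] = np.sin(angles)
    enc[:, 1::2] = np.cos(angles)
    return enc

def embed(coords, actions, params, pos_dim=4):
    """coords: (T, N, 2) positions; actions: (T, N) integer action labels."""
    num_frames, num_people, _ = coords.shape
    num_actions = params["w_action"].shape[0]
    one_hot = np.eye(num_actions)[actions]                           # (T, N, A)
    # One fully connected layer per input type (assumption).
    coord_feat = coords @ params["w_coord"] + params["b_coord"]      # (T, N, D)
    action_feat = one_hot @ params["w_action"] + params["b_action"]  # (T, N, D)
    # Share the same frame encoding across all participants in a frame.
    pos = positional_encoding(num_frames, pos_dim)                   # (T, P)
    pos = np.broadcast_to(pos[:, None, :], (num_frames, num_people, pos_dim))
    # Concatenate the embedded features with the positional encoding.
    return np.concatenate([coord_feat, action_feat, pos], axis=-1)

rng = np.random.default_rng(0)
feat_dim, num_actions = 6, 5  # hypothetical sizes
params = {
    "w_coord": rng.normal(size=(2, feat_dim)),
    "b_coord": np.zeros(feat_dim),
    "w_action": rng.normal(size=(num_actions, feat_dim)),
    "b_action": np.zeros(feat_dim),
}
coords = rng.uniform(size=(10, 4, 2))                 # 10 frames, 4 participants
actions = rng.integers(0, num_actions, size=(10, 4))
features = embed(coords, actions, params)
print(features.shape)  # (10, 4, 16)
```

Each participant in each frame ends up with a single feature vector (here 6 + 6 + 4 = 16 values), which is the kind of input the encoder and decoder attention layers would then consume.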

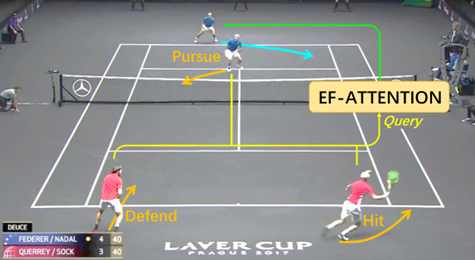

Figure 1: An example of the task of participant behaviour inference and prediction.

Figure 2: Framework of the EF-Transformer.

Applications & Advantages

Main application areas include group scene simulation for video games, enhancing non-player character behaviours to react appropriately to player actions, and group activity training, such as sports training or soldier tactics training.

Advantages:

- Limits error accumulation in behaviour prediction

- Improves recovery from spike errors

- Focuses on social interactions using the entry-flipping mechanism

- Enhances realism in group scene simulations

- Analyses strategies in group activity training
